Insights from Paper (Part II) - Zanzibar: Google's Consistent, Global Authorization System

In the first part of the post, I introduced Zanzibar and covered its Model, Language, and API. In this part, we will cover architecture, implementation, and the rest of the paper.

Implementation and Architecture

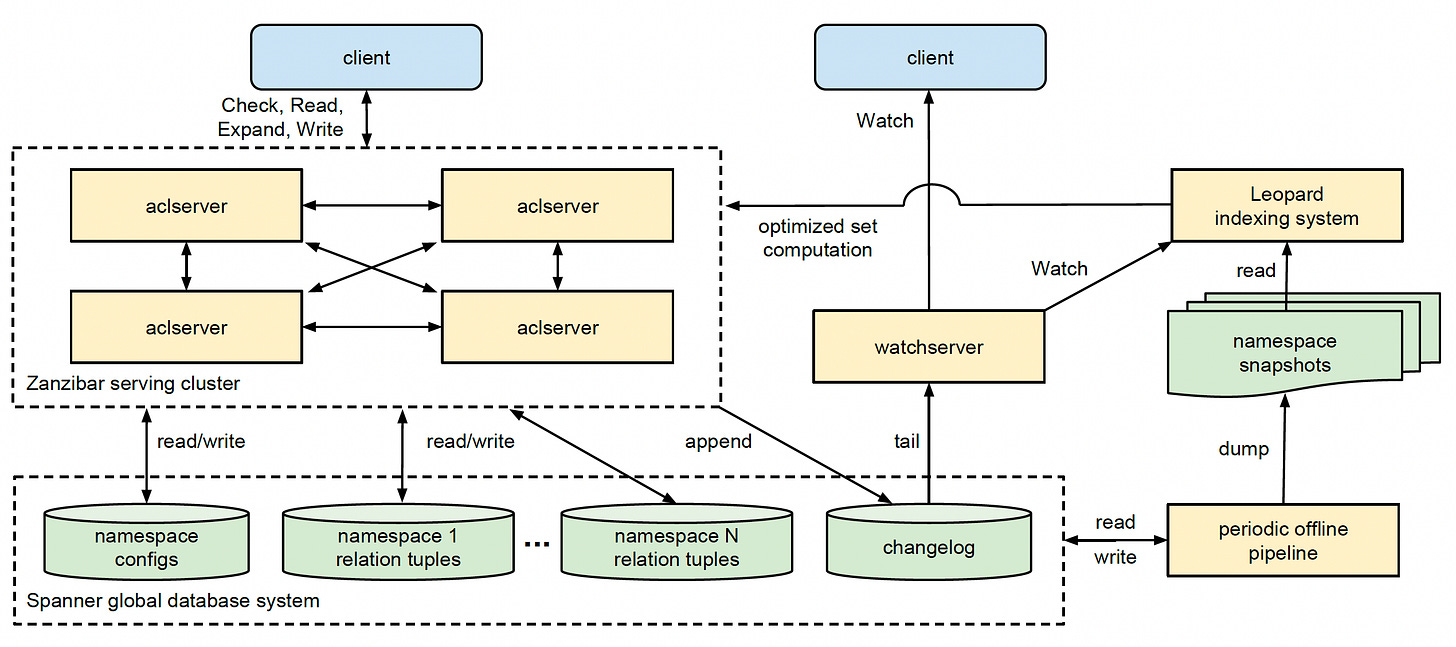

The main component in the Zanzibar system is aclserver. These servers handle check, read, write, and expand requests. See the diagram above.

The requests can come to any aclserver. It fans out the work to other servers as required. Those servers may, in turn, contact other servers to compute intermediate results. Finally, the initial server gathers the information and returns the response to the client.

There are mainly three types of databases hosted in Spanner for Zanzibar.

Per client, one database to store relation tuples.

One database to store all namespace configurations across all clients.

One changelog database to store all changes across all namespaces.

In the next section, we will discuss all these storage in detail.

The other important component is watchservers. These servers handle watch requests. Those servers use changelog to serve namespace changes.

Zanzibar runs a data processing pipeline to perform a few offline functions like below:

Produce dumps of the relation tuples in each namespace at a known snapshot timestamp.

Garbage-collect tuple versions older than a threshold configured per namespace

One more important component is an indexing system called Leopard. It optimizes operations on large and deeply nested sets.

Storage

Relation Tuple Storage

I have mentioned that the relation tuples for each namespace are stored in a separate database.

The row's primary key is (shard ID, object ID, relation, user, commit timestamp).

Clients do sharding of namespaces as per their data pattern. It can be based on object ID alone or by combining object ID and user.

Changelog Storage

The changelog database stores the history of tuple updates.

The row's primary key is (changelog shard ID, timestamp, unique update ID).

The changelog shard ID is randomly selected for each write.

Watch API consumes this data.

For consistency, each write is committed to the relation tuple storage and changelog in a single transaction.

Namespace Config Storage

This database has two tables:

A Config table to store all namespace config, and the primary key is namespace ID.

A Config update table to store all changelog of config updates, and the primary key is a timestamp.

This two-table structure helps load all configs upon startup and monitor the changelog to refresh configs continuously.

Serving

Evaluation Timestamp

Each server tracks the frequency of out-of-zone reads for data at a default staleness, fresher data, and staler data. Zanzibar uses these frequencies to compute a binomial proportion confidence interval of the probability.

This way, Zanzibar knows the probability that any given data is available locally at each staleness.

When enough data is computed, the server checks to see if each staleness value has a sufficiently low probability of incurring an out-of-zone read.

If yes, Zanzibar updates the default staleness bound to the lowest “safe” value.

If no, Zanzibar uses a two-proportion z-test to see if increasing the default will be a statistically significant amount safer.

Just a note here - This default staleness mechanism is purely a performance optimization, and does not violate consistency semantics.

Config Consistency

Changes to namespace configs can change the results of ACL evaluations.

So the question is how Zanzibar maintains consistency.

Zanzibar chooses a single snapshot timestamp for config metadata when evaluating each client request. All aclservers in a cluster use this timestamp for this request or any subrequests that fan out from it.

Each aclserver independently loads namespace configs from storage continuously. It means the aclserver has access to a different range of config timestamps.

A monitoring job tracks and aggregates the timestamp range available to every aclserver. This job reports the globally available range to every other server. On each incoming request, the server picks a time from this range.

Check Evaluation

Zanzibar evaluates ACL checks by converting check requests to boolean expressions.

Each Userset rewrite rule are also translated to boolean expressions as part of check evaluation.

Let’s take a simple example to understand the process. Checking a user U against a userset ⟨object#relation⟩ can be expressed as below (there is no userset rewrite rule here for simplicity):

Let me read out this for you in plain English. The original check is first converted for each tuple with user U. Since this user U may have membership in indirect ACLs and groups. We are supposed to find all tuples $U^1$ recursively and do the same check.

We have a problem if the indirect ACLs and groups are too deep or too wide. The solution is covered in the Leopard Indexing system.

To reduce the latency of checks, Zanzibar evaluates all leaf nodes of the boolean expression tree concurrently.

To evaluate leaf nodes, Zanzibar needs to read databases. Zanzibar applies a pooling mechanism to group reads for the same ACL check to minimize the number of read calls to Spanner.

Leopard Indexing System

In the previous section, we saw that Zanzibar would face difficulty in maintaining low latency if a user's indirect ACLs and groups were too deep or too wide. Zanzibar solves this problem using Leopard, a specialized index that supports efficient set computation.

A Leopard index represents a collection of named sets using (T, s,e) tuples. T is an enum representing the set type, and s, and e are 64-bit integers representing the set ID and the element ID, respectively.

Zanzibar represents group membership with two set types, GROUP2GROUP and MEMBER2GROUP. These are functions mapping from a set ID to element IDs:

GROUP2GROUP(s)→{e}, where s represents an ancestor group, and e represents a descendent group directly or indirectly a sub-group of the ancestor group. • MEMBER2GROUP(s) → {e}, where s represents an individual user and e represents a parent group in which the user is a direct member.

To evaluate whether user U is a member of group G, check whether the intersection of MEMBER2GROUP(U) and GROUP2GROUP(G)) is empty or not.

The Leopard system consists of three parts:

A serving system that is capable of consistent and low-latency operations across sets.

An offline, periodic index-building system.

A real-time online layer capable of continuously updating the serving system as tuple changes occur.

Index tuples are stored as ordered lists of integers in a structure, such as a skip list, thus allowing for efficient union and intersections among sets.

Example: A intersection B, requires only O(min(|A|, |B|)) skip-list seeks.

The index is sharded by element IDs and can be distributed across multiple servers. Shards are usually served entirely from memory.

The offline index builder generates index shards from a snapshot of Zanzibar relation tuples and configs and replicates the shards globally.

The Leopard servers continuously watch for new shards and swap old shards with new ones when they become available.

Leopard servers maintain an incremental layer that indexes all updates since the offline snapshot, where each update is represented by a (T, s,e, t,d) tuple, where t is the timestamp of the update and d is a deletion marker.

Updates with timestamps less than or equal to the query timestamp are merged on top of the offline index during query processing.

To maintain this incremental layer, Zanzibar uses Watch API.

A single Zanzibar tuple addition or deletion may yield tens of thousands of discrete Leopard tuple events. Each Leopard serving instance receives the complete stream of these Zanzibar tuple changes through the Watch API.

Handling Hot Spots

Zanzibar found the handling of hot spots to be the most critical frontier for low latency and high availability.

Zanzibar servers in each cluster form a distributed cache for both reads and check evaluations.

Cache entries are distributed across Zanzibar servers with consistent hashing.

Zanzibar fans out requests to the corresponding Zanzibar servers to process checks or reads via an internal RPC interface. To minimize the number of internal RPCs, Zanzibar computes the forwarding key from the object ID for most namespaces.

To handle hot forwarding keys, we cache results at both the caller and the callee of internal RPCs.

To handle the “cache stampede” problem, Zanzibar maintains a lock table on each server to track outstanding reads and checks. Only one request will begin processing among requests sharing the same cache key.

Performance Isolation

Zanzibar uses the following isolation mechanisms to ensure that performance problems are isolated.

First, to ensure proper CPU capacity allocation, Zanzibar measures each RPC's cost in terms of generic cpu-seconds.

Each Zanzibar server also limits the number of outstanding RPCs to control memory usage.

Zanzibar limits the maximum number of concurrent reads per (object, client) and per client on each Spanner server.

Zanzibar uses different lock table keys for requests from different clients to prevent any throttling from Spanner.

Tail Latency Mitigation

Zanzibar’s distributed processing requires measures to accommodate slow tasks.

Zanzibar places at least two replicas of the Spanner and Leopard indexers in every geographical region with Zanzibar servers.

Zanzibar relies on request hedging for Spanner and for the Leopard indexer.

Related Work

Multics supports ACLs on segments and directories.

Taos OS supports compound principals that incorporate how an identity has been transformed as it passes through a distributed system.

Role-based access control (RBAC), first proposed in this paper, introduced the notion of roles similar to Zanzibar relations.

Roles can inherit from each other and imply permissions. Several Zanzibar clients have implemented RBAC policies on top of Zanzibar’s namespace configuration language.

Google’s Cloud IAM system is built as a layer on Zanzibar’s ACL storage and evaluation system.

Conclusion

Zanzibar unifies access control data and logic.

Zanzibar has a simple and flexible data model. It also has support for configuration language. Both of them combined give the power to create various access control policies.

Zanzibar’s consistency model is awesome. It respects the ordering of user actions while authorization checks are being evaluated at distributed locations without global synchronization.

Zanzibar evaluates deeply or widely nested group membership with the Leopard indexing system.

Zanzibar combines a distributed cache with a mechanism to deduplicate in-flight requests. It thus mitigates hot spots.

All these measures together result in a system that scales to trillions of access control rules and millions of authorization requests per second.

References

Zanzibar paper: https://research.google/pubs/pub48190.pdf

Cache stampede: https://en.wikipedia.org/wiki/Cache_stampede

Google Cloud IAM: https://cloud.google.com/iam/

Google Cloud Spanner: https://cloud.google.com/spanner/

Multics: https://dl.acm.org/doi/pdf/10.1145/361011.361067

Taos OS : https://dl.acm.org/doi/pdf/10.1145/173668.168640

RBACK: https://arxiv.org/pdf/0903.2171