Insights from Paper - The Design of a Practical System for Fault-Tolerant Virtual Machines

1. Abstract

This paper discusses implementing a commercial enterprise-grade system that provides fault-tolerant virtual machines.

The system is based on the approach of replicating the execution of a primary virtual machine (VM) via a backup virtual machine on another server.

The team designed a complete system in VMware vSphere 4.0 that is easy to use, runs on commodity servers, and reduces the performance of real applications by less than 10%.

The data bandwidth needed to keep the primary and secondary VMs executing in lockstep is less than 20 Mbit/s.

The team has created extra components beyond replicated VM execution to address many practical issues.

2. Introduction

Primary/backup is a common approach to implementing fault-tolerant servers. In this approach, a backup server is always available to take over if the primary server fails.

The state of the backup server must be kept nearly identical to the primary server at all times.

One way to replicate the state on the backup server is to continuously ship changes to all of the primary's state, including CPU, memory, and I/O devices, to the backup. However, the bandwidth needed to send this state, especially changes in memory, can be very large.

Another approach, which needs much less bandwidth, is state machine replication. The idea is to model the servers as deterministic state machines that are kept in sync by starting them from the same initial state and ensuring that they receive the same input requests in the same order.

The main problem with this approach is handling non-deterministic operations. Extra coordination work is needed to ensure that a primary and backup are kept in sync for these operations.
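To make the state-machine idea concrete, here is a minimal Python sketch. The `Replica` class, its deposit/withdraw operations, and the use of a random number as the non-deterministic input are hypothetical stand-ins, not anything from the paper. Two replicas that start in the same state and apply the same inputs in the same order stay identical; a non-deterministic value stays safe only if the primary resolves it once and ships the result to the backup.

```python
import random
from dataclasses import dataclass


@dataclass
class Replica:
    """Toy deterministic state machine whose whole state is one integer."""
    balance: int = 0

    def apply(self, op: str, amount: int) -> None:
        # Same starting state + same (op, amount) always gives the same new state.
        if op == "deposit":
            self.balance += amount
        elif op == "withdraw":
            self.balance -= amount


def naive_replication(n: int) -> tuple:
    """Each replica resolves the non-deterministic input itself, so they can diverge."""
    primary, backup = Replica(), Replica()
    for _ in range(n):
        primary.apply("deposit", random.randint(1, 100))
        backup.apply("deposit", random.randint(1, 100))
    return primary.balance, backup.balance


def logged_replication(n: int, seed: int = 0) -> tuple:
    """The primary resolves the non-deterministic input once and logs the result;
    the backup applies the logged values in the same order instead of recomputing."""
    rng = random.Random(seed)
    primary, backup = Replica(), Replica()
    log = []  # stands in for the channel between primary and backup
    for _ in range(n):
        entry = ("deposit", rng.randint(1, 100))
        primary.apply(*entry)
        log.append(entry)
    for entry in log:
        backup.apply(*entry)
    return primary.balance, backup.balance
```

`logged_replication(50)` returns two equal balances, while `naive_replication(50)` will usually return two different ones, which is exactly the coordination problem for non-deterministic operations.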

On physical servers, implementing this coordination for non-deterministic operations is difficult. However, a virtual machine running on top of a hypervisor is an excellent platform for implementing state-machine replication.

A virtual machine can be considered a well-defined state machine. Its operations are the operations of the machine being virtualised.

The hypervisor has full control over the execution of virtual machines. Thus, the hypervisor can capture all the necessary information about non-deterministic operations on the primary VM and replay these operations correctly on the backup virtual machine.

Because this approach requires little bandwidth, the backup VM can run on a physical machine that is farther away from the primary, for example elsewhere on a campus rather than in the same building.

The VMware vSphere 4.0 platform runs fully virtualized x86 virtual machines, so it can provide fault tolerance for any x86 operating system and application.

The team uses deterministic replay technology to record the execution of the primary and ensure that the backup executes identical instructions.

VMware vSphere Fault Tolerance (FT) is based on this deterministic replay but adds necessary extra protocols and functionality to build the complete fault-tolerant system.

The system not only provides fault tolerance but also automatically restores redundancy after failure.

It starts a new backup virtual machine on any available server in the local cluster.

At the time of writing, the system supports only uniprocessor virtual machines.

3. Basic FT Design

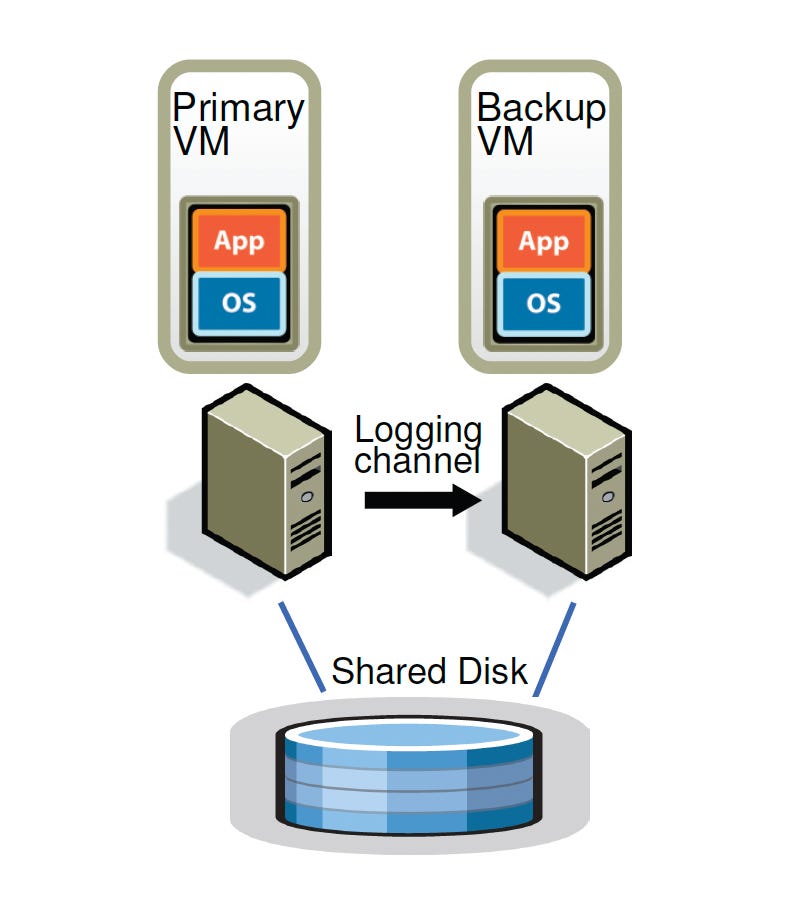

The diagram above shows the basic FT configuration.

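For each VM that we want to make fault-tolerant (the primary VM), a backup VM runs on a different physical server. The backup is kept in sync with the primary and executes identically to it, though with a small time lag; the two VMs are said to be in virtual lockstep.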

The virtual disk used by both VMs is on shared storage, so both the primary and the backup can access it.

Only the primary VM advertises its presence on the network, so all network inputs arrive at the primary VM. Similarly, all other inputs (such as keyboard and mouse) go to the primary VM only.

All the inputs of the primary VM are sent to the backup via a network connection called a logging channel.

For non-deterministic operations, additional information is sent over the logging channel so that the backup can reproduce them exactly.

In summary, the backup VM always executes identically to the primary VM.

The primary and backup VMs follow a specific protocol, including explicit acknowledgments from the backup, to ensure that no data is lost if the primary fails.

The system detects whether a primary or backup VM has failed using a combination of heartbeats between the servers and monitoring of the traffic on the logging channel.

The system also ensures that only one of the primary and backup VMs takes over execution, even in the split-brain case, where the primary and backup servers have lost communication with each other.

3.1 Deterministic Replay Implementation

There are three challenges for replicating the execution of any VM running on any operating system.

First, all the inputs and non-determinism needed to ensure deterministic execution of the backup VM must be captured correctly.

Second, the inputs and non-determinism must be applied correctly to the backup virtual machine.

Third, both must be done in a way that does not degrade performance.

VMware deterministic replay records the inputs of a VM and all possible non-determinism associated with the VM's execution in a stream of log entries written to a log file.

For non-deterministic events, such as timer or IO completion interrupts, the exact instruction at which the event occurred is also recorded.

During replay, these events are delivered at the same point in the instruction stream.
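The sketch below illustrates recording an event together with the instruction count at which it occurred, then delivering it at the same count during replay. It is a toy model under stated assumptions: a loop index plays the role of the instruction counter (a real hypervisor needs hardware support to count instructions precisely), and the `LogEntry` structure and the state update are invented for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class LogEntry:
    instr_count: int  # index of the instruction at which the event was delivered
    value: int        # data delivered with the event (hypothetical payload)


def run_primary(program_len: int, seed: int):
    """Run a toy VM whose interrupts arrive at unpredictable points; log each
    interrupt together with the exact instruction count where it was taken."""
    rng = random.Random(seed)
    state, log = 0, []
    for pc in range(program_len):
        if rng.random() < 0.1:                 # an interrupt happens to arrive here
            entry = LogEntry(pc, rng.randint(1, 9))
            log.append(entry)
            state = state * 31 + entry.value   # handler effect depends on timing
        state = (state + pc) % 1_000_003       # one ordinary "instruction"
    return state, log


def run_backup(program_len: int, log):
    """Replay: deliver each logged event at the same instruction count."""
    state, i = 0, 0
    for pc in range(program_len):
        while i < len(log) and log[i].instr_count == pc:
            state = state * 31 + log[i].value
            i += 1
        state = (state + pc) % 1_000_003
    return state
```

Because the handler's effect depends on exactly when it runs, the backup reaches the primary's final state only if every event is injected at the logged instruction count.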

3.2 FT Protocol

For VMware Fault Tolerance, the team used deterministic replay to produce the log entries needed to record the execution of the primary virtual machine.

It does not write the log entries to disk but sends them to the backup VM via the logging channel.

The backup VM replays the entries in real time and hence executes identically to the primary VM.

The most crucial point is that the log entries alone are not enough: the system must augment them with a strict FT protocol on the logging channel to achieve fault tolerance. The fundamental requirement is as follows.

Output Requirement: If the backup VM ever takes over after the primary fails, it will continue executing in a way that is entirely consistent with all the outputs that the primary VM sent to the external world.

Put simply, the necessary condition is that the backup VM must have received all log entries generated before the output operation.

The easiest way to enforce the output requirement is to create a special log entry at each output operation.

The output requirement can then be enforced by a specific rule.

Output Rule: The primary VM may not send an output to the external world until the backup VM has received and acknowledged the log entry associated with the operation producing the output.

So it is very simple: If the backup VM has received all the log entries, including the log entry for the output-producing operation, then it will be able to reproduce the state of the primary VM exactly at the output point.

It is important to note that the Output Rule does not require stopping the execution of the primary VM. It only requires delaying the sending of the output, so the VM itself can continue executing.

The diagram below charts the requirements of the fault-tolerance protocol.

It shows a timeline of events on the primary and backup VMs.
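A minimal sketch of the Output Rule, assuming log entries carry increasing sequence numbers and that the backup's acknowledgments are cumulative (an ack for sequence number n covers every entry up to n). The `Primary` class and its method names are hypothetical. Note that `output()` returns immediately: execution continues, and only the externally visible send is delayed.

```python
from collections import deque


class Primary:
    """Holds each external output until the backup acknowledges the log entry
    that produced it; execution itself is never blocked by the rule."""

    def __init__(self):
        self.next_seq = 0
        self.acked_seq = -1
        self.held = deque()  # (seq, payload) outputs waiting for an ack
        self.sent = []       # what the external world has actually seen
        self.channel = []    # log entries handed to the logging channel

    def log(self, entry) -> int:
        seq = self.next_seq
        self.next_seq += 1
        self.channel.append((seq, entry))
        return seq

    def output(self, payload) -> None:
        # Special log entry for the output operation, then delay the output itself.
        seq = self.log(("output", payload))
        self.held.append((seq, payload))

    def on_ack(self, seq: int) -> None:
        # Cumulative ack (assumption): the backup has received every entry up to seq.
        self.acked_seq = max(self.acked_seq, seq)
        while self.held and self.held[0][0] <= self.acked_seq:
            self.sent.append(self.held.popleft()[1])
```

For example, after `p = Primary(); p.log("input"); p.output("pkt-1")`, `p.sent` stays empty until `p.on_ack(1)` acknowledges the output's log entry.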

3.3 Detecting and Responding to Failure

If the primary VM fails, the backup VM should go live. However, because the backup executes with a lag, it may not yet have caught up with the primary.

The backup VM may have a number of log entries that it has received and acknowledged but not yet consumed.

The backup VM must continue replaying its execution from the log entries until it has consumed the last log entry.

At this point, the backup stops replay mode and starts executing as a normal VM.

The new primary VM will start producing output to the external world.

During the transition, some device-specific operations may be required to allow the output to occur properly. For example, FT advertises the MAC address of the new primary VM on the network so that the physical network switches know which server the new primary VM is located on.
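The go-live sequence can be sketched as follows. The `Backup` class and the `advertise` callback (standing in for device-specific takeover work such as advertising the new primary's MAC address) are hypothetical; the point is the ordering: drain every received log entry first, then leave replay mode, then perform the takeover steps.

```python
from collections import deque


class Backup:
    def __init__(self):
        self.buffer = deque()  # received and acknowledged, not yet replayed
        self.state = []        # toy record of what has been replayed
        self.mode = "replay"

    def receive(self, entry) -> None:
        self.buffer.append(entry)

    def replay_one(self) -> None:
        if self.buffer:
            self.state.append(self.buffer.popleft())

    def go_live(self, advertise) -> None:
        # The primary has been declared failed: consume every remaining entry first...
        while self.buffer:
            self.replay_one()
        # ...then stop replaying and run as a normal VM...
        self.mode = "live"
        # ...and only then do device-specific takeover work (hypothetical hook).
        advertise()
```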

FT uses UDP heartbeats between servers running fault-tolerant VMs to detect if a server may have crashed.

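FT also monitors the logging traffic sent from the primary to the backup VM and the acknowledgments sent from the backup to the primary VM. Because a running guest generates regular timer interrupts, this traffic should never stop for a functioning VM, so a halt in it indicates a failure.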
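A minimal failure-detector sketch, assuming the policy is to declare the peer failed when either heartbeats or logging traffic have been silent for longer than a timeout. The class, the injectable `now` timestamps, and the timeout value are assumptions for illustration, not VMware's implementation.

```python
class FailureDetector:
    """Tracks the last time each signal was seen from the peer (assumed policy)."""

    def __init__(self, timeout: float, now: float = 0.0):
        self.timeout = timeout
        self.last_heartbeat = now
        self.last_log_traffic = now

    def on_heartbeat(self, now: float) -> None:
        # A UDP heartbeat arrived from the peer's server.
        self.last_heartbeat = now

    def on_log_traffic(self, now: float) -> None:
        # A log entry (primary to backup) or an acknowledgment (backup to primary).
        self.last_log_traffic = now

    def peer_failed(self, now: float) -> bool:
        # Silence on either signal for longer than the timeout counts as failure.
        return (now - self.last_heartbeat > self.timeout
                or now - self.last_log_traffic > self.timeout)
```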

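Any failure-detection method is susceptible to the split-brain problem: if the primary and backup servers lose communication with each other, each may conclude that the other has failed. The system must therefore ensure that only one of the primary and backup VMs goes live.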

To avoid the split-brain problem, the team uses the shared storage that holds the VM's virtual disk.

When either the primary or the backup VM wants to go live, it executes an atomic test-and-set operation on the shared storage. If the operation succeeds, that VM is allowed to go live. If it fails, the other VM must already have gone live, so the current VM halts itself.
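Below is a sketch of the tie-breaking step, with a `threading.Lock` standing in for the atomicity that the shared storage provides. `SharedStorage` and `try_go_live` are hypothetical names; the sketch only shows that, however the two VMs race, exactly one test-and-set succeeds.

```python
import threading


class SharedStorage:
    """Stands in for the shared disk; test_and_set is atomic (assumed primitive)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._flag = False

    def test_and_set(self) -> bool:
        """Set the flag and return True only if it was previously clear."""
        with self._lock:
            if self._flag:
                return False
            self._flag = True
            return True


def try_go_live(storage: SharedStorage, name: str, results: dict) -> None:
    # The winner goes live; the loser knows the other VM already did and halts.
    results[name] = "live" if storage.test_and_set() else "halted"
```

Running `try_go_live` for `"primary"` and `"backup"` on two threads against the same `SharedStorage` always leaves one of them `"live"` and the other `"halted"`.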

One last thing: FT automatically restores redundancy by starting a new backup VM on another host.

4. Practical Implementation of FT

4.1 Starting and Restarting FT VMs

A critical component of the system is a mechanism for starting a backup VM in the same state as the primary VM.

This same component can be used to restart a backup VM after a failure has occurred.

VMware vSphere has VMotion functionality. It allows the migration of a running VM from one server to another server with minimal disruption. The VM pause times are typically less than a second.


The team created a modified version of VMotion that creates an exact running copy of the VM on a remote server without destroying the VM on the local server.

This modified VMotion also sets up a logging channel to the remote server. The source VM enters logging mode as the primary, and the destination VM enters replay mode as the new backup.

vSphere implements a clustering service that maintains management and resource information. When a failure occurs and a primary VM needs a new backup VM to re-establish redundancy, the primary VM informs the clustering service that it needs a new backup.

The clustering service determines the best server on which to run the backup VM based on the available resources. Normally, it takes a few minutes after a server failure for the new backup VM to become available.

4.2 Managing the Logging Channel

The hypervisors of the primary and backup VMs each maintain a large buffer for log entries. As the primary VM executes, it produces log entries into its log buffer, and the backup VM consumes log entries from its own log buffer.

The content of the primary VM’s log buffer is flushed out to the logging channel as soon as possible, and the log entries are read into the backup’s log buffer from the logging channel as soon as they arrive.

The backup VM sends an acknowledgment back to the primary each time it reads some log entries from the network into its log buffer.

If the backup VM encounters an empty log buffer when it needs to read the next log entry, it stops executing until a new log entry is available. Similarly, if the primary VM encounters a full log buffer when it needs to write a log entry, it must stop executing until log entries can be flushed out.
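The blocking behavior described above maps naturally onto a bounded producer/consumer queue. In this simplified sketch a single Python `queue.Queue` stands in for the primary's buffer, the logging channel, and the backup's buffer together, and acknowledgments are omitted; the buffer size and function names are hypothetical. `put()` blocks while the queue is full (the primary stalls) and `get()` blocks while it is empty (the backup waits).

```python
import queue
import threading

LOG_BUFFER_ENTRIES = 8  # hypothetical size; real log buffers are much larger


def primary_vm(log_buffer: queue.Queue, n_entries: int) -> None:
    for i in range(n_entries):
        # Blocks while the buffer is full: the primary stalls until entries drain.
        log_buffer.put(("entry", i))
    log_buffer.put(None)  # end-of-stream marker, only needed for this sketch


def backup_vm(log_buffer: queue.Queue, replayed: list) -> None:
    while True:
        # Blocks while the buffer is empty: the backup waits for new entries.
        entry = log_buffer.get()
        if entry is None:
            break
        replayed.append(entry)
```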

The team implemented an additional mechanism to slow down the primary VM so that the backup VM does not fall too far behind. Additional information is sent to determine the real-time execution lag between the VMs.

Typically, the execution lag is less than 100 milliseconds. If the backup VM starts to lag significantly, VMware FT slows down the primary by informing the scheduler to give it a slightly smaller amount of CPU.
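The throttling can be thought of as a slow feedback loop. The function below is an assumed policy for illustration only, not VMware's actual algorithm: when the measured lag exceeds a target, it trims the primary's CPU share by a small step, and once the backup has caught up, it gradually gives the CPU back. The target, step, and floor values are hypothetical.

```python
def adjust_cpu_share(share: float, lag_ms: float,
                     target_ms: float = 100.0,
                     step: float = 0.02,
                     floor: float = 0.5) -> float:
    """One iteration of a simple feedback loop (assumed policy).

    share:  fraction of a CPU the scheduler grants the primary VM, in (0, 1]
    lag_ms: measured real-time execution lag of the backup behind the primary
    """
    if lag_ms > target_ms:
        # The backup is falling behind: give the primary slightly less CPU.
        return max(floor, share - step)
    # The backup has caught up: gradually hand CPU back to the primary.
    return min(1.0, share + step)
```

Using small steps keeps the primary's slowdown gentle; a real controller would also need to decide how often to sample the lag.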

4.3 Operation on FT VMs

Another important matter to deal with is control operations. For example, if the primary VM is explicitly powered off, the backup VM must also be stopped rather than attempting to go live. For such control operations, special entries are sent from the primary to the backup on the logging channel.

The only operation that can be done independently on the primary and backup VMs is VMotion.

4.4 Implementation Issues for Disk IOs

Let's start with the implementation issues related to disk IOs.

Disk operations are non-blocking and can execute in parallel, so simultaneous disk operations that access the same disk location can lead to non-determinism. The solution is to detect any such IO races and force the racing operations to execute sequentially, in the same order on the primary and the backup.
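One way to picture the race detection is to treat each disk IO as a range of blocks and serialize any IO that overlaps one already in flight. The sketch makes simplifying assumptions: IOs are issued in a fixed order, an overlap on any block counts as a race, and IOs are grouped into batches that run one after another while IOs inside a batch may run in parallel (this also serializes some non-racing IOs, trading parallelism for simplicity). `DiskIO`, `overlaps`, and `schedule` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DiskIO:
    io_id: int
    start: int   # first disk block
    length: int  # number of blocks


def overlaps(a: DiskIO, b: DiskIO) -> bool:
    """True if the two IOs touch at least one common disk block."""
    return a.start < b.start + b.length and b.start < a.start + a.length


def schedule(ios):
    """Split IOs into batches; an IO that races with one in the current batch
    starts a new batch, so racing IOs always run in issue order."""
    batches, current = [], []
    for io in ios:
        if any(overlaps(io, prev) for prev in current):
            batches.append(current)
            current = []
        current.append(io)
    if current:
        batches.append(current)
    return batches
```

Because the same deterministic schedule is applied on both VMs, racing IOs complete in the same order on the primary and the backup.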

A disk operation can also race with a memory access by an application or the OS in a VM. For example, non-determinism can arise if an application or the OS is reading a memory block at the same time that a disk read is writing to that block.

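One solution is to set up page protection temporarily on the pages that are targets of disk operations, so that any access to those pages traps and the VM is paused until the disk operation completes. Because changing page protections is expensive, the team instead used bounce buffers: a disk read goes into a temporary buffer, and the data is copied into guest memory only when the IO completion is delivered.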

There are issues associated with disk IOs that are outstanding (not completed) on the primary VM when a failure happens, and the backup takes over.

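The newly promoted primary cannot be sure whether the outstanding disk IOs completed successfully. The team solved this by re-issuing the pending IOs during the backup VM's go-live process. This is safe because, with races eliminated, each IO specifies exactly which memory and disk blocks it accesses, so repeating an IO that already completed produces the same result.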
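A minimal sketch of why re-issuing a completed write is harmless, with a dictionary standing in for the disk. `WriteIO`, `apply_write`, and `go_live_reissue` are hypothetical names, and the sketch assumes no other IO touches the same blocks in between, which is what eliminating IO races guarantees.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WriteIO:
    start: int   # first disk block
    data: tuple  # block contents to write, one item per block


def apply_write(disk: dict, io: WriteIO) -> None:
    """The write names its target blocks explicitly, so repeating it is idempotent."""
    for offset, block in enumerate(io.data):
        disk[io.start + offset] = block


def go_live_reissue(disk: dict, pending: list) -> None:
    """On promotion, re-issue every IO whose completion was never logged,
    whether or not the old primary had actually finished it."""
    for io in pending:
        apply_write(disk, io)
```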

4.5 Implementation Issues for Network IO

VMware vSphere provides many performance optimizations for VM networking.

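VMware FT disables the asynchronous network optimizations, because asynchronous updates to a VM's network ring buffers with incoming packets introduce non-determinism.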

The elimination of asynchronous network updates, combined with the delay in sending packets required by the Output Rule, created performance challenges for networking.

The team implemented clustering optimizations to reduce VM traps and interrupts. Reducing the delay for transmitted packets is another important performance optimization for networking.

5. Design Alternatives

5.1 Shared vs. Non-shared Disk

In this design, the primary VM and backup VM share the same virtual disk. The shared disk is considered external to both VMs, so any write to it counts as communication with the external world. Therefore, only the primary VM actually writes to the disk, and writes to the shared disk must be delayed according to the Output Rule.

An alternate design is for the primary VM and backup VM to have separate, non-shared virtual disks. In this design, the backup VM performs all disk writes to its own virtual disk, which keeps its contents in sync with the primary VM's virtual disk. Because each disk is then part of its VM's internal state, the primary's disk writes do not have to be delayed by the Output Rule.

The disadvantage of the non-shared design is that the two copies of the virtual disk must be explicitly synced up when fault tolerance is first enabled, and again after a failure.

5.2 Executing Disk Reads on the Backup VM

In the default design, the backup VM never reads from the virtual disk.

An alternate design is for the backup VM to execute disk reads itself, which eliminates the logging of disk-read data. This approach can significantly reduce the traffic on the logging channel for workloads that do a lot of disk reads.

However, this approach has problems. It may slow down the backup VM's execution, because the backup must execute all disk reads and wait for them to complete. Extra work must also be done to deal with failed disk reads.

6. Related Work

Bressoud and Schneider described the initial idea of implementing fault tolerance for virtual machines via software contained completely at the hypervisor level.

Napper and Friedman describe implementations of fault-tolerant Java virtual machines.

Dunlap describes an implementation of deterministic replay targeted towards debugging application software on a paravirtualized system.

7. Conclusion and Future Work

The team has designed and implemented an efficient and complete system in VMware vSphere that provides fault tolerance (FT) for virtual machines running on servers in a cluster.

The performance of fault-tolerant VMs under VMware FT on commodity hardware is excellent, and for some typical applications, there is less than 10% overhead.

Currently, deterministic replay has been implemented efficiently only for uniprocessor VMs. Extending it to multiprocessor VMs is future work.

References

Bressoud and Schneider: Hypervisor-based Fault-tolerance

Napper and Friedman: A Fault-Tolerant Java Virtual Machine