Insights from paper(part I) - Manu: A Cloud Native Vector Database Management System

Data science and AI applications need to manage high-dimensional vector data. Embedding vectors are widely used for analyzing and searching unstructured data.

The vector collections are exceeding billion-scale, so there is a need for fully managed and horizontally scalable vector databases. These databases should have long-term evolvability, tunable consistency, good elasticity, and high performance.

Manu is a cloud-native vector database that implements all these features. Integrating these features into a relational database is challenging if you follow the traditional DBMS design rules.

Manu relaxed the data model, and consistency constraints as vector data do not require these.

Manu does three critical things:

It exposes the write-ahead log (WAL) and binlog as backbone services.

Its write components are designed as log publishers, while all read-only analytic and search components are designed as independent subscribers to the log services.

It utilizes multi-version concurrency control (MVCC) and a delta consistency model to simplify the communication and cooperation among the system components.

Because of these design choices, Manu has low coupling among the system components.

One more critical thing Manu does — It optimizes for performance and usability with hardware-aware implementations.

Manu is used for many applications like recommendation, multimedia, language, medicine, and security.

Introduction

One IDC report mentions that unstructured data, such as text, images, and video, comprised about 80% of the 40,000 exabytes of new data generated in 2020.

With the rise of large learning-based embedding models (LLMs), embedding vectors to manage unstructured data has become commonplace in many applications, such as e-commerce, social media, and drug discovery.

One of the core features of these applications is that they encode the semantics of unstructured data into a high-dimensional vector space.

Once our data is represented in vectors, recommendations, search, and analysis can be implemented via similarity-based vector search.

A few specialized vector databases are available in the market, like Pinecone, Qdrant, Vald, Vespa, Weaviate, and Milvus. Milvus was the previous vector database created by the Manu team. It was open-sourced in 2019 under the LF AI & Data Foundation.

From then, the team collected feedback from more than 1200 industry users and found that some of the design principles Milvus adopted needed to be more suitable.

Manu was built from scratch with a focus on a cloud-native architecture. It is designed keeping below points in consideration:

Support for complex transactions is not necessary.

A tunable performance-consistency trade-off is important.

High hardware cost calls for fine-grained elasticity.

So vector databases should have tunable consistency, functionality-level decoupling, and per-component scalability.

This can be achieved by relaxing the transaction complexity of RDBMS, which is not required in the same spirit here.

The crux of this paper is — Manu follows the “log as data” paradigm.

Manu structures the entire system as a group of log publish/subscribe microservices.

The write-ahead log (WAL) and inter-component messages are published as “logs.” Read-side components, such as search and analytical engines, are all built as log subscribers.

This architecture decouples system functionalities which was one of the core requirements.

Each log entry is assigned a globally unique timestamp. Like Apache Flink has watermarks, Manu has a unique log entry called time-tick which is periodically inserted into each log channel. It signals that event time for log subscribers is in progress.

This timestamp and time-tick form the basis of the tunable consistency mechanism and multi-version consistency control(MVCC), the second core requirement.

Manu is extensively optimized for performance and usability.

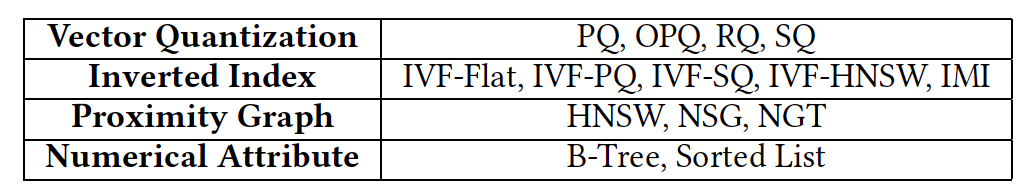

Manu supports various indexes for vector search, including vector quantization, inverted index, and proximity graphs.

Manu’s implementation is tailored to better utilize the parallelization capabilities of modern CPUs and GPUs, along with the improved read/write speeds of SSDs over HDDs.

Manu has built a visualization tool to track performance in real-time. It includes an auto-configuration tool to recommend indexing algorithm parameters using machine learning.

This is quite a big introduction to Manu. To summarize, the paper makes the following contributions:

Feedback from 1200 industry users over three years has been incorporated into the design and shows how vector database application requirements differ from relational databases.

Manu’s key architectural designs as a cloud-native vector database. It is built by the core design philosophy of relaxing transaction complexity in exchange for tunable consistency and fine-grained elasticity.

It includes usability and performance-related enhancements, e.g., high-level API, a GUI tool, automatic parameter configuration, and SSD support.

Background and Motivation

Let’s start with the goal of video recommendation to help users discover new videos based on their personal preferences and previous browsing history.

Using deep neural networks (machine learning models), features of users and videos, such as search history, watch history, age, gender, video language, and tags, are converted to embedding vectors.

These models are carefully designed and trained to encode the similarity between user and video vectors into a common vector space.

Now the task of video recommendation is to retrieve the candidate videos from the collection of video vectors via similarity scores. The score is calculated with respect to the specified user vector.

This system has to be dynamic as new videos are added, and old videos are deleted.

This video recommendation and other applications of vector databases can involve hundreds of billions of vectors with daily growth at a hundred-million scale.

Now imagine serving million-scale queries per second (QPS), existing relational, NoSQL, and NewSQL are not built to manage vector data on that scale.

There are a few fundamental different things in the vector databases.

The architecture and theory of vector databases are not mature. There is a need to manage constant evolution.

Complex ACID transactions are unnecessary for vector databases.

Vector database applications need a flexible performance-consistency trade-off.

Vector databases have more stringent and diversified hardware requirements.

Here operations are computation-intensive, so hardware accelerators such as GPUs are critical.

Accesses to vector data generally have poor locality, so we need more RAM.

Different applications vary significantly in their resource demands.

We have covered a lot of things around the background of vector databases. Now the question is, what is at the core of a vector database?

Core functionalities of a vector database include data insertion, indexing, filtering, and vector search.

Let’s understand from our use case of video recommendation. It requires online insertion and high concurrency vector search.

Take another example of drug discovery. In this case, offline data ingestion and indexing are acceptable.

By now, we are in good shape to define the design goal of Manu or in-general for a vector database. Those are the followings:

Long-term evolvability

Tunable consistency

Good elasticity

High availability

High performance

Strong adaptability

The Manu System

It is time to dive deep into Manu’s architecture and system design.

Schema, Collection, Shard, and Segment

Schema: The basic data types are vector, string, boolean, integer, and floating point.

Suppose the entity shown in the above diagram presents a product on an e-commerce platform.

The Primary key is the ID of the entity. It can either be an integer or a string. The user can define it, or Manu will add an integer primary key.

The Feature vector is the embedding of the product. The Label is the product category, such as food, book, and cloth. The Numerical attribute is a float or an integer associated with the product, such as price, weight, or production date.

Manu supports multiple labels and numerical attributes in each entity. These fields are used only for filtering.

The Logical sequence number (LSN) is a system field hidden from users.

Collection: A Collection is a set of entities. It has no relation with any other collection.

For example, a collection can contain all the products of an e-commerce platform.

Shard: A Shard is an insertion/deletion channel. Entities are hashed into multiple shards based on their primary keys during insertion/deletion.

Manu’s data placement unit is segment rather than shard.

Segment: Entities from each shard are organized into segments. A segment can be in either a growing or sealed state.

Sealed segments are read-only. Growing segments can accept new entities.

A growing segment will switch to a sealed state when it reaches a predefined size or if a period of time has passed without an insertion.

Manu merges small segments into larger ones for search efficiency.

System Architecture

Manu has a service-oriented design. It has the following four layers:

Access layer, coordinator layer, worker layer, and storage layer

Access layer: It consists of stateless proxies that serve as the user endpoints.

This layer receives requests from clients and distributes the requests to the corresponding processing components. It aggregates partial search results before returning them to clients.

This layer caches a copy of the metadata to verify the legitimacy of search requests Search request verification is lightweight, and moving it to the proxies has two key benefits.

The requests that fail verification are rejected early.

It reduces the number of routing hops for requests.

Coordinator layer: This layer manages system status, maintains collections metadata, and coordinates the system components for processing tasks.

There are four coordinators.

The root coordinator handles data definition requests, such as creating/deleting collections, and maintains meta-information of the collections.

The data coordinator records detailed collection information and coordinates the data nodes to transform data update requests into binlogs.

The query coordinator manages the status of the query nodes and adjusts the assignment of segments (along with related indexes) to query nodes for load balancing.

The index coordinator maintains meta-information of the indexes (e.g., index types and storage routes) and coordinates index nodes in index-building tasks.

A coordinator can have multiple instances for reliability. A collection can be served by separate coordinator instances for throughput.

Worker layer: This layer conducts the actual computation tasks.

The worker nodes are stateless in this layer. They fetch read-only copies of data to conduct tasks and do not need to coordinate with each other.

Different worker nodes are used for different tasks. For example, query nodes for query processing, index nodes for index building, and data nodes for log archiving.

Each worker type can scale independently.

This design also achieves resource isolation as different computation tasks require different QoS.

Storage layer: This layer persists system status, metadata, collections, and associated indexes.

Manu uses etcd (a key-value store) to host system status and metadata for the coordinators. Etcd provides high availability with its leader election mechanism for failure recovery.

When metadata is updated, the updated data is first written to etcd and then synchronized to coordinators.

The volume of other data (e.g., binlog, data, index) is large. Manu uses AWS S3 for persistence due to its high availability and low cost.

Storage engines, including AWS S3, MinIO, and Linux file systems, are currently supported.

An important note here — High latency of object storage is not a performance bottleneck. The worker nodes conduct computation tasks on in-memory, read-only copies of data.

The Log Backbone

Manu’s backbone is a log system. Manu exposes the write-ahead log (WAL) and binlog as backbone services.

The binlog is the base part. WAL is the incremental part.

These services complement each other in delay, capacity, and cost.

Loggers are entry points for publishing data onto the WAL.

Data nodes subscribe to the WAL and convert the row-based WALs into column-based binlogs.

Manu records all the requests that change the system state to the log, including data definition requests, data manipulation requests, and system coordination messages.

Manu uses logical logs instead of physical logs. Logical logs focus on event recording.

The above diagram shows the detailed architecture of the log system. The diagram covers the part related to insert requests only.

The loggers are organized in a hash ring, and each logger handles one or more logical buckets in the hash ring based on consistent hashing.

Each shard corresponds to a logical bucket in the hash ring and a WAL channel.

Each entity in insert requests is hashed to a shard (and thus channel) based on their ID.

When a logger receives a request, it does the following things:

Verify the legibility of the request.

Assign an LSN for the logged entity by consulting the central time service oracle (TSO).

Determine the segment the entity should go to, and write the entity to WAL.

Writes the mapping of the new entity ID to segment ID into a local LSM tree.

Flushes the incremental part of the LSM tree periodically to object storage. It keeps the entity to segment mapping using the SSTable format of RocksDB.

The WAL is row-based and reads in a streaming manner for low delay and fine-grained log pub/sub. WAL is implemented via a cloud-based message queue such as Kafka or Pulsar.

There are multiple logical channels for the WAL to prevent different types of requests from interfering with each other.

Data nodes subscribe to the WAL and convert the row-based WALs into column-based binlogs.

It means values from the same field from the WALs are stored together in a column format in binlog files. It helps in reading per-field values in batches.

Inter-component messages are also passed via logs. For example, Data nodes announce when segments are written to storage, and index nodes announce when indexes have been built.

Tunable Consistency

In Manu, data seen by a query can be stale for up to delta time units with respect to the time of the last data update. This is called the delta consistency model. This “staleness tolerance” is given in virtual time and is specified by the user.

In practice, users can define this temporal tolerance as physical time of 10 sec.

Manu uses a hybrid logical clock in the TSO to generate timestamps. These assign LSN to each request which is exceptionally close to physical time.

Each timestamp has two components: a physical component that tracks physical time and a logical component that tracks event order.

The physical component indicates the physical time when Manu received the request. Multiple events may happen at the same physical time unit so logical component help in that case.

For a log subscriber, it needs to know the following three things.

The user-specified staleness tolerance 𝜏

The time of the last data update

The issue time of the search request

1 and 3 are simple. How to calculate the second point?

Manu has a time-tick mechanism. Some special control messages, called time-ticks, are periodically inserted into each log channel. They denote the latest time-tick a subscriber consumed as 𝐿𝑠 and the issue time of a query as 𝐿𝑟. If 𝐿𝑟 − 𝐿𝑠 < 𝜏 is unsatisfied, the log subscriber must wait for the next time-tick.

This delta consistency model beautifully parametrizes strong consistency and eventual consistency. Consistency is strong if the delta is 0. When the delta is infinite, the consistency is eventual.

The paper claims that, to the best of their knowledge, they are the first to support delta consistency in a vector database.

Index Building

It is apparent that we can not scan the whole database for a search, so there is a need for an index.

Manu supports the following index for vector search.

Let’s talk briefly about these indexes. You can refer to references for deep dive. Each is a separate world in vector similarity search for Artificial Intelligence.

Vector quantization (VQ) methods compress vectors to reduce memory footprint and the costs for vector distance/similarity computation. Scalar quantization (SQ) maps each dimension of a vector (data types typically are int32 and float) to a single byte.

Read paper:

Optimized product quantization for approximate nearest neighbor search Similarity search in high dimensions via hashing

Scalar quantization for large scale image search

Inverted indexes group vectors into clusters and only scan the most promising clusters for a query.

Read paper:

Compression of inverted indexes for fast query evaluation

Proximity graphs connect similar vectors to form a graph, and achieve high accuracy and low latency at the cost of high memory consumption

Read paper:

Fast approximate nearest neighbor search with the navigating spreading-out graph

Optimization of indexing based on k-nearest neighbor graph for proximity search in high-dimensional data

Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs

Approximate nearest neighbor search on high dimensional data — experiments, analyses, and improvement

Besides these vector indexes, Manu also supports traditional indexes (b-tree) on the attribute field of the entities to accelerate attribute-based filtering.

Vector Search

Manu supports the following searches:

Classical vector search (we saw the index in the previous section)

Attribute filtering (using traditional indexes)

Multivector search

For classical vector search, the distance/similarity function can be Euclidean distance, inner product, or angular distance.

Attribute filtering is useful when searching vectors similar to the query subject to some attribute constraints. For example, an e-commerce platform may want to find products that interest the customer and cost less than 100$.

Multi-vector search is required when an entity is encoded by multiple vectors. For example, a product can be described by both embeddings of its image and embeddings of its text description.

Manu supports two strategies for multi-vector search and chooses the one to use according to the entity similarity function. Detailed information is available in the Milvus paper written by the team.

Manu partitions a collection into segments and distributes the segments among query nodes for parallel execution for vector search.

The proxies cache inquiries to the query coordinator and dispatch search requests to query nodes. The query nodes perform vector searches on their local segments. The proxies also cache a copy of the distribution of segments received from query coordinator.

For a top-𝑘 vector search request, the query nodes search their local segments to obtain the segment-wise top-𝑘 results. Each query node merges these results to form the node-wise top-𝑘 results. Then, the node-wise top-𝑘 results are aggregated by the proxy for the global top-𝑘 results and returned to the application.

To handle the deletion of vectors, the query nodes use a bitmap to record the deleted vectors in each segment and filter the deleted vectors from the segment-wise search results.

Users can configure Manu to batch search requests to improve efficiency.

Query nodes obtain data from three sources, i.e., the WAL, the index files, and the binlog.

For data in the growing segments, query nodes subscribe to the WAL and conduct searches using brute force.

When a segment changes from growing state to sealed state, its index will be built by an index node and stored in object storage. After that, query nodes are notified to load the index and replace the temporary index.

Query nodes access the binlog for data when the distribution of segments among the query nodes changes.

The query coordinator manages the segment distribution and monitors the query nodes for liveness and workload to coordinate failure recovery and scaling.

Manu does not ensure that segment redistribution is atomic. This does not affect correctness as the proxies remove duplicate result vectors for a query.

In the next part of the article, I will cover Manu’s feature highlights, its use case in the real-word, and the rest of the paper.

References

The original paper: https://www.vldb.org/pvldb/vol15/p3548-yan.pdf

Pinecone: https://www.pinecone.io/.

Qdrant: https://qdrant.tech/.

Vald: https://github.com/vdaas/vald.*

Vespa: https://vespa.ai/.*

Weaviate: https://github.com/semi-technologies/weaviate.*

Milvus: A Purpose-Built Vector Data Management System: Jianguo Wang, Xiaomeng Yi, Rentong Guo, Hai Jin, Peng Xu, Shengjun Li, Xiangyu Wang, Xiangzhou Guo, Chengming Li, Xiaohai Xu, et al. 2021. In Proceedings of the 2021 International Conference on Management of Data. 2614–2627.

Optimized product quantization for approximate nearest neighbor search: Tiezheng Ge, Kaiming He, Qifa Ke, and Jian Sun. 2013. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2946–2953.

Similarity search in high dimensions via hashing: Aristides Gionis, Piotr Indyk, Rajeev Motwani, et al. 1999. In Vldb, Vol. 99. 518–529.

Scalar quantization for large scale image search: Wengang Zhou, Yijuan Lu, Houqiang Li, and Qi Tian. 2012. In Proceedings of the 20th ACM international conference on Multimedia. 169–178. 3561

Compression of inverted indexes for fast query evaluation: Falk Scholer, Hugh E Williams, John Yiannis, and Justin Zobel. 2002. In Proceedings of the 25th annual international ACM SIGIR conference on Research and development in information retrieval. 222–229.

Fast approximate nearest neighbor search with the navigating spreading-out graph: Cong Fu, Chao Xiang, Changxu Wang, and Deng Cai. 2019. Proceedings of the VLDB Endowment 12, 5 (2019), 461–474.

Optimization of indexing based on k-nearest neighbor graph for proximity search in high-dimensional data: Masajiro Iwasaki and Daisuke Miyazaki. 2018. arXiv preprint arXiv:1810.07355 (2018).

Efficient and robust approximate nearest neighbor search using hierarchical navigable small world graphs: Yu A Malkov and Dmitry A Yashunin. 2018. IEEE transactions on pattern analysis and machine intelligence 42, 4 (2018), 824–836.

Approximate nearest neighbor search on high dimensional data — experiments, analyses, and improvement: Yu A Malkov and Dmitry A Yashunin. 2018. IEEE transactions on pattern analysis and machine intelligence 42, 4 (2018), 824–836.