Insights from Paper(Part II) — ZooKeeper: Wait-free coordination for Internet-scale systems

Introduction

In the previous post, I introduced ZooKeeper. We discussed the ZooKeeper service, its data model, client API and a few examples of primitives it provides for distributed system process coordination.

In this post, I will cover the rest of the ZooKeeper paper. It is used inside Yahoo! for internal and external applications. We will also discuss the high-level architecture and implementation.

ZooKeeper Applications

In this section, the paper covers a few example applications using ZooKeeper in production.

The Fetching Service (FS)

This paper is written by Yahoo! team. Yahoo! had a search engine at that time. The search engine needs to do crawling of the billions of web pages. The Fetchig Service was part of the crawler. The service has a master process and many page-fetching processes called fetchers. The master is responsible for providing configuration to the fetchers. The fetchers are responsible for fetching web pages and writing the status and health information in ZooKeeper.

FS uses ZooKeeper for two purposes:

Manage configuration — metadata of fetchers

Leader election — electing a master

Katta

Katta, a distributed indexer and non-Yahoo! application, uses ZooKeeper in production. The main feature of Katta is that it divides the indexing work using shards. A master server is responsible for assigning the shards to slave servers and tracking their progress.

Katta uses ZooKeeper for three purposes:

Group membership — Who is the master, and who are the slaves

Elect master and handle master failover (leader election)

Track and propagate shard assignments (configuration management)

Yahoo! Message Broker(YMB)

Most of you know Kafka has been using ZooKeeper for quite a long time. Yahoo! had its own distributed message broker (publish-subscribe) system. The system was managing thousands of topics distributed among a set of servers. Each topic was replicated using a backup of the primary. It ensured two machines had each message. The system has a shared-nothing distributed architecture.

YMB uses ZooKeeper for three purposes:

Configuration management — The distribution of topics

Failure detection —Deal with failures of machines in the system

Group membership— Primary and backup

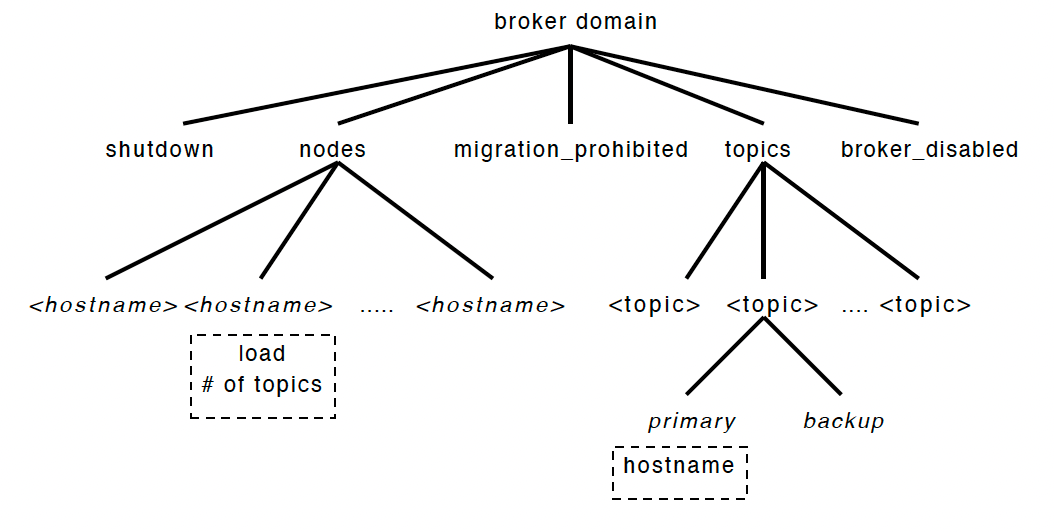

See the layout of YMB in the below diagram

Each broker domain has a lot of child znodes. The important ones are nodes and topics. The nodes directory has an ephemeral znode for each of the active servers. The topics directory has a child znode for each topic managed by YMB. These topic znodes have primary and backup child znodes.

The other child nodes (shutdown and migration prohibited etc.) are monitored by all servers. It provides centralized control of YMB.

ZooKeeper Implementation

In this section, we will talk about high-level components and their implementation in ZooKeeper. See the diagram below.

All requests come to Request Processor.

A write request needs coordination among servers. An implementation of the atomic broadcast-based protocol is used for this. The servers commit the changes to the ZooKeeper database, which is fully replicated across servers.

A read request doesn’t need any coordination. The state of the local database is read, and the response is prepared from that.

You may have a question in mind what ZooKeeper database am I talking about?

The database is an in-memory database that has the entire data tree. Refer to my previous post to learn more about the data tree. This database is fully replicated across all servers.

Each node of the data tree has a maximum size of 1 MB, so memory size is not a problem. The maximum value is configurable.

The ZooKeeper team writes log updates on the disk before they are applied to the in-memory database. It provides the required recoverability.

ZooKeepr has implemented a replay log (a write-ahead log) of committed operations and generates periodic snapshots of the in-memory database.

One important point: a write request is forwarded to a leader. The other servers, called followers, get message proposals (from the leader) that have agreed (via Zab protocol) to state change details.

Request Processor

Some servers may have applied more transactions than others. This is fine for the ZooKeeper. But why? The answer is that the ZooKeeper transactions are idempotent.

For each write request, the leader calculates what the system's state will be when the write will be applied. Leader transforms that state into a transaction.

Some outstanding transactions may still need to be applied to the database. The ZooKeeper takes those into account and calculates the future state.

For example, if a client does a conditional setData and the version number in the request matches the future version number of the znode being updated, the service generates a setDataTXN that contains the new data, the new version number, and updated time stamps.

If an error occurs, such as mismatched version numbers or the znode to be updated does not exist, an errorTXN is generated instead.

Atomic Broadcast

We discussed that all write requests are forwarded to the leader. The leader executes the request and broadcasts the change to the ZooKeeper state through an atomic broadcast protocol called the Zab. Zab uses, by default, simple majority quorums to decide on a proposal. It means ZooKeeper can only work if a majority of servers are correct.

Zab guarantees that changes broadcast by a leader are delivered in the order they were sent. Other than this, for the leader change scenario, all changes from previous leaders are delivered to an established leader before the new leader broadcasts his changes.

There are a few good implementation points that simplify the work.

The ZooKeeper uses TCP for transport, so message order is maintained

by the network, which allows it to simplify the implementation.

The ZooKeeper uses the leader chosen by Zab as the ZooKeeper leader.

The ZooKeeper uses the log to keep track of proposals as the write-ahead log for the in-memory database so that It does not have to write messages

twice to disk.

Each ZooKeeper server has a copy in memory of the ZooKeeper

state ( data tree). When a ZooKeeper server recovers from a crash, it

must recover this internal state.

ZooKeeper uses periodic snapshots, so it only requires the redelivery of

messages since the start of the snapshot for the recovery.

The ZooKeeper snapshots are called fuzzy snapshots. They do not lock

the ZooKeeper state to get created. The ZooKeeper does a depth-first scan of the data tree, atomically reading each znode’s data and meta-data and writing them to disk.

If a server crashes and recovers with a fuzzy snapshot and Zab redelivers the state changes, finally server is in full sync.

Client-Server Interactions

When a server processes a write request, it also sends out and clears notifications relative to any watch that corresponds to that update.

One important point is that servers process writes in order and do not concurrently process other writes or reads.

Each read request is processed locally by the server and tagged with a zxid that corresponds to the last transaction seen by the server. Since read are processed by the server locally, the ZooKeeper can deliver excellent performance for read-dominant workloads.

There is a problem with this approach. The read operation may return a stale value. The ZooKeeper has solved this problem by providing a sync call.

To guarantee that a given read operation returns the latest updated value, a client calls sync followed by the read operation.

The sync call is executed asynchronously and is ordered by the leader after all pending writes to its local replica.

In this way, the FIFO order guarantee of client operations and the global guarantee of the sync enable the result of the read operation to reflect any changes before the sync was issued.

The ZooKeeper servers responses include the zxid that the response

is relative to. Heartbeat messages during intervals of no activity include the last zxid seen by the server that the client is connected to.

If the client connects to a new server, that new server ensures that its view of the ZooKeeper data is at least as recent as the client's view by checking the client's last zxid against its last zxid.

If the client has a more recent view than the server, the server does not reestablish the session with the client until the server has caught up.

The ZooKeeper uses timeouts to detect client session failures. The leader determines that there has been a failure if no other server receives anything from a client session within the session timeout.

If the client cannot communicate with a server to send a request or

heartbeat, it connects to a different ZooKeeper server to re-establish its session.

Related work

The ZooKeeper has taken learning from a lot of other systems. A few of them are mentioned here.

Zab is the atomic broadcast protocol on which the ZooKeeper is implemented.

A distributed lock service for transactional applications is discussed in this paper.

Chubby proposes a system to manage advisory locks for distributed applications.

The ISIS system transforms abstract-type specifications into fault-tolerant distributed objects.

This paper talks about building fault-tolerant services using state-machine replication as ZooKeeper does.

Paxos is an algorithm that enables efficient implementations of replicated state machines for asynchronous systems.

Conclusions

The ZooKeeper solves a few core problems of coordinating processes in distributed systems. The good part is that it takes a wait-free approach for solving the problems.

The ZooKeeper achieves throughput values of hundreds of thousands of operations per second for read-dominant workloads by using fast reads with watches, both of which are served by local replicas.

References

Previous post (part I)on ZooKeeper:

Original paper: https://www.usenix.org/legacy/event/atc10/tech/full_papers/Hunt.pdf

Zab: http://www.cs.cornell.edu/courses/cs6452/2012sp/papers/zab-ieee.pdf

Chubby: https://research.google.com/archive/chubby-osdi06.pdf

ISIS: https://www.cs.swarthmore.edu/~newhall/readings/isis.pdf

Fault-Tolerance using state machine: https://www.cs.cornell.edu/fbs/publications/SMSurvey.pdf

Paxos: https://lamport.azurewebsites.net/pubs/paxos-simple.pdf