Insights from Paper- Google Dremel: Interactive Analysis of WebScale Datasets

Introduction

Google’s Dremel is a scalable, interactive ad-hoc query system for analyzing read-only nested data. It is capable of running aggregation queries over trillion-row tables in seconds.

The system scales to thousands of CPUs and petabytes of data and has thousands of users at Google.

Large-scale analytical data processing has become widespread in web companies and across industries. Performing interactive data analysis at scale demands a high degree of parallelism.

The data used in web and scientific computing is often nonrelational. Hence, a flexible data model is essential in these domains.

A nested data model underlies most structured data processing at Google and other major web companies.

Dremel is capable of operating on in situ nested data. In situ refers to the ability to access data ‘in place.’ For example, Data can be in GFS or BigTable. See my post on GFS and Bigtable papers to learn more about these.

Dremel can execute many queries over such data that would ordinarily require a sequence of MapReduce jobs but at a fraction of the execution time.

Dremel is not intended to replace MapReduce but is used in conjunction.

Dremel has been in production since 2006. A lot of instances of Dremel are deployed within Google. The range of each instance is from ten to thousands of nodes. A few example systems are listed below:

Analysis of crawled web documents.

Tracking install data for applications on Android Market.

Crash reporting for Google products.

OCR results from Google Books.

Spam analysis.

Debugging of map tiles on Google Maps.

Tablet migrations in managed Bigtable instances.

Results of tests run on Google’s distributed build system.

Disk I/O statistics for hundreds of thousands of disks.

Resource monitoring for jobs run in Google’s data centers.

Symbols and dependencies in Google’s codebase.

Dremel builds on ideas from web searches and parallel DBMSs. Dremel’s architecture borrows the concept of a serving tree used in distributed search engines. Dremel provides a high-level, SQL-like language to express ad hoc queries. Dremel uses a column-striped storage representation, which enables it to read less data from secondary storage and reduce CPU cost due to cheaper compression.

Column stores have been adopted for analyzing relational data, but no one has extended the idea to nested data models.

This paper will cover the following contributions:

A novel columnar storage format for nested data.

Dremel’s query language and execution.

How are execution trees used?

Experiments on trillion-record, multi-terabyte datasets

Background

The paper takes a route of an example to explain the concepts. Let's dive deep into that.

Alice, an engineer at Google, develops a novel idea for extracting new signals from web pages. She runs an MR job on the input data. The output data with the new signals are stored in billions of records in the distributed file system.

To analyze the results of her experiment, she launches Dremel and executes several interactive commands. She finds an irregularity in one new signal and digs deeper by writing a FlumeJava code. Once the issue is fixed, she sets up a continuous pipeline to process input data. She formulates a few SQL queries on the pipeline output and adds that to a dashboard. Finally, she adds her new dataset to the catalog.

The above process requires interoperation between the query processor and other data management tools. The first ingredient for that is a common storage layer. We can use GFS for that. The second ingredient is shared storage format.

Columnar storage suits relational data, but we must make it work for the nested data model. How can it be done? Let’s see that in the next section of the Data model.

Data Model

The data model originated in the context of distributed systems called ‘Protocol Buffers’ is used. The data model is based on strongly-typed nested records. Let’s understand a few essential points around those.

Records consist of one or multiple fields.

Every field in a record has a name.

Every field in a record has an optional multiplicity label. Repeated fields (*) may occur multiple times in a record. They are interpreted as lists of values.

Optional fields (?) may be missing from the record. Required fields must appear exactly once.

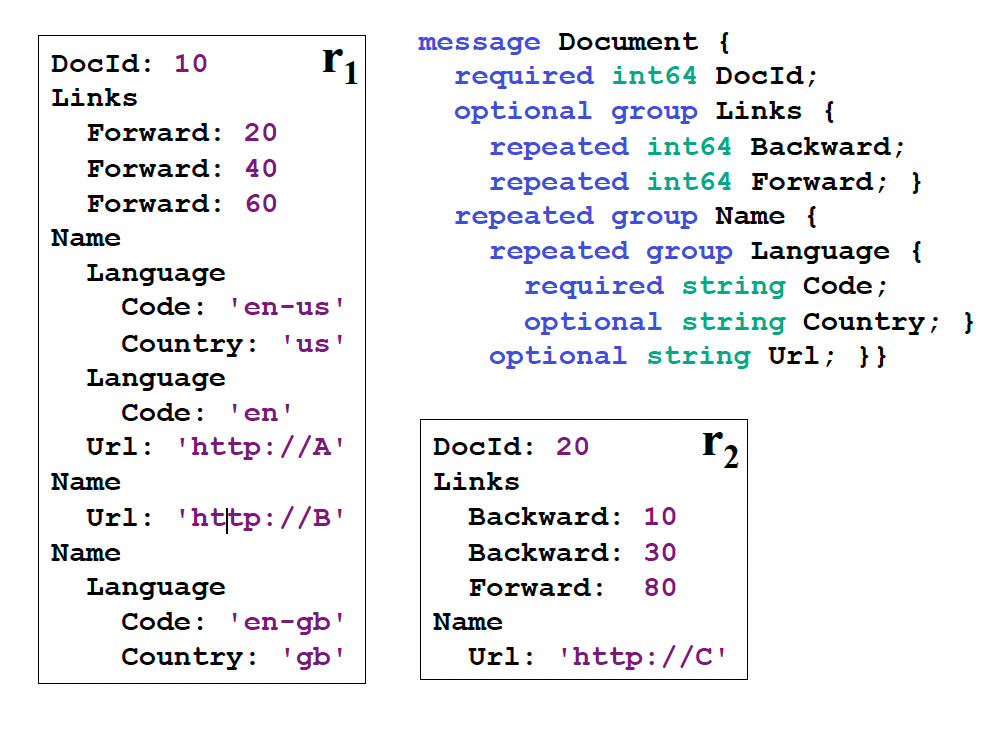

Let’s take an example to understand it better. See the diagram. In the diagram, a schema defines the record-type Document, representing a web document.

A Document has a required integer DocId and optional Links, containing a list of Forward and Backward entries holding DocIds of other web pages.

A document can have multiple Names, which are different URLs by which the document can be referenced.

A Name contains a sequence of Code and (optional) Country pairs.

There are two example records r1 and r2 shown in the diagram. Please note that the record structure in the schema is outlined using indentation.

The full path of a nested field is denoted using the usual dotted notation, e.g., Name.Language.Code.

This nested data model backs a platform-neutral, extensible mechanism for serializing/deserializing structured data.

Code generation tools can produce bindings for different programming languages.

Nested Columnar Storage

We understood the data model. Now the most crucial part is how it has to be stored. We aim to consecutively store all the values of a given field to improve retrieval efficiency.

In this section, we will cover three points:

Lossless representation of record structure in a columnar format

Fast encoding

Efficient record assembly

Representation - Repetition and Definition Levels

Dremel introduced the concepts of repetition and definition levels to represent a value’s level precisely and how it is repeated.

Repetition levels

Let’s take the example of the previous diagram. The field Code occurs three times in the record r1. To identify these occurrences, Dremel attaches a repetition level to each value.

The full path for the Code field is Name.Language.Code. This path has two repeated fields Name and Language. Hence, the repetition level of Code ranges between 0 and 2. Level 0 denotes the start of a new record.

Suppose we are scanning record r1 top down. When we encounter ‘en-us’, we have not seen any repeated fields, so the repetition level is 0.

When we see ‘en’, field Language has been repeated, so the repetition level is 2.

Finally, when we encounter ‘en-gb’, Name has been repeated most recently, so the repetition level is 1.

Thus, the repetition levels of Code values in r1 are 0, 2, 1.

Definition levels

Once again, let’s take the example from the previous diagram. The record r1 has no Backward links but field Links is defined (at level 1). To preserve this information, Dremel adds a NULL value with the definition level 1 to the Links.Backward column.

So the summary is - Each value of a field with path p, esp. every NULL, has a definition level specifying how many fields in p that could be undefined (because they are optional or repeated) are present in the record.

Encoding

Each column is stored as a set of blocks. Each block contains the repetition and definition levels.

NULLs are not stored explicitly as the definition levels determine them: any definition level smaller than the number of repeated and optional fields in a field’s path denotes a NULL. Definition.

Splitting Records into Columns

We understood the representation. Now the challenge is efficiently producing column stripes with repetition and definition levels.

The base algorithm for computing repetition and definition level is shown in the diagram below:

Let’s understand the algorithm. It recurses into the record structure and computes the levels for each field value using the while loop at line 5.

The algorithm creates a tree of field writers, whose structure matches the field hierarchy in the schema. The basic idea is to update field writers (line 14) only when they have their data.

Record Assembly

In this section, we will understand how to reconstruct the original records for a given subset of fields with all other fields stripped away.

Dremel builds the assembly using a finite state machine (FSM). The FSM reads the field values and levels for each field, and appends the values sequentially to the output records.

An FSM state corresponds to a field reader for each field we need in the output. State transitions are labeled with repetition levels. Once a reader fetches a value, FSM look at the next repetition level to decide what next reader to use. The FSM is traversed from the start to end state once for each record.

The above diagram shows an FSM that reconstructs the complete records in our running example. If only a subset of fields needs to be retrieved, we construct a simpler FSM that is cheaper to execute.

The above diagram shows an FSM for reading the fields DocId and Name.Language.Country.

Query Language

Dremel’s query language is based on SQL and is designed to be efficiently implementable on columnar nested storage.

Each SQL statement (and algebraic operators it translates to) takes as input one or multiple nested tables and their schemas and produces a nested table and its output schema.

The above diagram shows a sample query that performs projection, selection, and within-record aggregation. The query is evaluated over the table t = {r1; r2} from our running example.

Query Execution

Tree architecture

Dremel uses a multi-level serving tree to execute queries, as shown in the diagram below:

A root server receives incoming queries, reads metadata from the tables, and routes the queries to the next level in the serving tree. The leaf servers communicate with the storage layer or access the data on the local disk.

Consider a simple aggregation query below:

SELECT A, COUNT(B) FROM T GROUP BY AWhen the root server receives the above query, it determines all tablets, i.e., horizontal partitions of the table, that comprise T and rewrites the query as follows:

where

Each ith T1 is a disjoint partition of tablets in T processed by server i at level 1.

Each serving level performs a similar rewriting. Ultimately, the queries reach the leaves, which scan the tablets in T in parallel. On the way up, intermediate servers perform a parallel aggregation of partial results.

Query dispatcher

Dremel serves several queries which are executed simultaneously.

A query dispatcher schedules queries based on their priorities and balances the load. Its other crucial role is to provide fault tolerance.

Observations

Dremel scans quadrillions of records per month. The diagram below shows query response time distribution in a typical monthly workload of one Dremel system, on a logarithmic scale. Some queries achieve a scan throughput of close to 100 billion records per second on a shared cluster, and even higher on dedicated machines.

There are the following observations from the experiments:

Scan-based queries can be executed at interactive speeds on

disk-resident datasets of up to a trillion records.

Near-linear scalability in the number of columns and servers

is achievable for systems containing thousands of nodes.

Record assembly and parsing are expensive.

In a multi-user environment, a larger system can benefit from

economies of scale while offering a qualitatively better user

experience.

If trading speed against accuracy is acceptable, a query can

be terminated much earlier and yet see most of the data.

The bulk of a web-scale dataset can be scanned fast. Getting

to the last few percent within tight time bounds is hard.

Related Work

The MapReduce (MR) framework was designed to address the challenges of large-scale computing in the context of long-running batch jobs.

Like MR, Dremel provides fault-tolerant execution, a flexible data model, and in situ data processing capabilities.

The success of MR led to a wide range of third-party implementations like Hadoop.

Vendors like Aster, Cloudera, Greenplum, and Vertica offer hybrid systems that combine parallel DBMSs with MR. The HadoopDB is a research system in this hybrid category.

It is conceivable that parallel DBMSs can be made to scale to thousands of nodes, but there is no such published work. Also, there is no prior literature studying MR on columnar storage.

Many commercial DBMSs support the storage of nested data using XML. One system that uses columnar XML representation is XMill.

A recent SQL-like language operating on nested data is Pig.

Other systems for parallel data processing include Scope and DryadLINQ.

Conclusions

Dremel is a distributed system for the interactive analysis of large datasets. Dremel is a custom, scalable data management solution built from simpler components.

Dremel ecomplements the MapReduce paradigm. Experiments show that it performs excellently even for trillion records of multi-terabyte datasets of real data.

In the future, the team is planning to cover formal algebraic specifications, joins, extensibility mechanisms, etc.